Once installed, NTFS for Mac works like a plug-in that lets macOS read from and write to NTFS file systems normally.

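macOS still has a number of compatibility gaps, so some devices or operations do not work out of the box and need a workaround. NTFS for Mac exists to fill one of these gaps. By default, macOS can read NTFS partitions but cannot write to them, so when a disk used on a Mac is formatted as NTFS, you cannot save files to it. Installing NTFS for Mac is a good way to solve this.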

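Because of the way it works, many users regard it as an NTFS read/write plug-in for the Mac: once it is installed successfully, writing to NTFS disks simply works.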

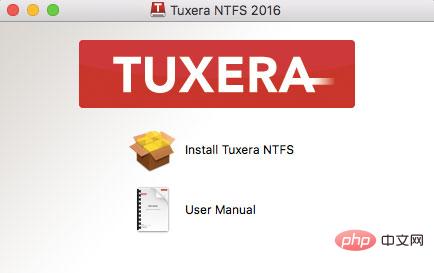

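First, download the software from the official Chinese website and install it on your Mac. Installation is simple and takes only a few steps. For detailed instructions, see the article "How to install NTFS for Mac" (https://www.ntfsformac.cc/faq/rhaz-tnfm.html). After a successful installation, restart the computer and your NTFS partitions are ready to use.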

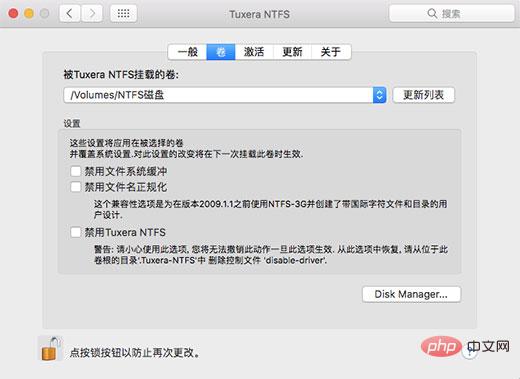

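NTFS for Mac normally scans for and recognizes the NTFS partitions already present on the system, so no extra steps are needed. It extends macOS much as a plug-in would, which is what sets this software apart. You can also open the software to check which disks it has recognized or to adjust its settings.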
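If you want to confirm from the command line which NTFS volumes are mounted and whether they are still read-only, a minimal Python sketch along these lines may help. It is not part of NTFS for Mac. It assumes that the macOS `mount` command prints lines of the form `device on mount_point (fstype, option, ...)`, and that NTFS volumes report a file-system type containing "ntfs" (the exact name may differ depending on the driver). The function names `parse_ntfs_mounts` and `list_ntfs_mounts` are hypothetical helpers introduced for this illustration.

```python
import re
import subprocess

# Assumed line format of `mount` output on macOS, for example:
#   /dev/disk2s1 on /Volumes/Backup (ntfs, local, nodev, nosuid, read-only, noowners)
MOUNT_LINE = re.compile(
    r"^(?P<device>\S+) on (?P<mount_point>.+) \((?P<options>[^()]*)\)$"
)


def parse_ntfs_mounts(mount_output):
    """Return a list of dicts describing NTFS volumes found in `mount` output."""
    volumes = []
    for line in mount_output.splitlines():
        match = MOUNT_LINE.match(line.strip())
        if not match:
            continue  # skip lines that do not follow the assumed format
        options = [opt.strip() for opt in match.group("options").split(",")]
        fs_type = options[0].lower()
        # Assumption: the file-system type contains "ntfs" for NTFS volumes.
        if "ntfs" not in fs_type:
            continue
        volumes.append(
            {
                "device": match.group("device"),
                "mount_point": match.group("mount_point"),
                "read_only": "read-only" in options,
            }
        )
    return volumes


def list_ntfs_mounts():
    """Run `mount` and report the NTFS volumes it lists."""
    result = subprocess.run(
        ["mount"], capture_output=True, text=True, check=True
    )
    return parse_ntfs_mounts(result.stdout)
```

Calling `list_ntfs_mounts()` on a Mac returns one entry per NTFS volume. A volume that still shows `read_only` as `True` after installation and a restart is probably not being handled by the driver yet.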

To open the software, find it in System Preferences. The "Volumes" tab has a drop-down list that shows every NTFS partition, and each partition can be configured individually.

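The software is both a read/write tool and, in practice, an essential plug-in for macOS, which makes it a good choice for anyone who works with external storage often. It lets a Mac use USB flash drives, hard disks, floppy disks, and other NTFS-formatted storage devices normally.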

Recommended tutorial: "MacOS Tutorial"